Sophisticated human biomechanics from smartphone video

Using synchronized video from a pair of smartphones, engineers at Stanford have created an open-source motion-capture app that democratizes the once-exclusive science of human movement at 1% of the cost.

| OpenCap: Sophisticated human biomechanics from smartphone video. Kurt Hickman, Director for Visual Media, Stanford. YouTube, Oct 19, 2023 |

By Andrew Myers, Stanford News, October 19, 2023

Diseases such as arthritis, along with sports injuries, can impair the way people move and engage in life. Computational musculoskeletal analysis can inform better interventions and improve rehabilitation decisions for patients and athletes, but measuring the dynamics and forces at play in human movement requires a lot of time, equipment, and expertise, which makes it expensive. Though millions of people worldwide might benefit, computational motion analysis is too often a luxury few patients can afford.

In a new study published Oct. 19 in PLOS Computational Biology, a team of researchers at Stanford University introduces OpenCap, a powerful open-source motion-capture application that uses video from two calibrated iPhones working in tandem to quantify human motion and the underlying forces in the musculoskeletal system. Its creators hope it will become a turning point in human movement analysis, helping to identify movement patterns that increase an athlete's risk of injury or to optimize treatments for individuals with mobility-limiting conditions. OpenCap computes in minutes the kind of movement insights that used to take days in labs costing $150,000, at less than 1% of the cost.
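The article does not say how two calibrated phones turn flat video into 3D positions. One textbook approach is triangulation: each camera's calibration converts a 2D body keypoint into a viewing ray, and the 3D point is estimated where the two rays (nearly) meet. The sketch below uses the simple midpoint method under stated assumptions (ideal pinhole cameras, no lens distortion, keypoints already detected in both views). The `Camera` class, function names, and numbers are hypothetical illustrations, not OpenCap's code, and OpenCap's actual pipeline may use a different triangulation method.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec3, k: float) -> Vec3:
    return (a[0] * k, a[1] * k, a[2] * k)


@dataclass
class Camera:
    """Hypothetical calibrated pinhole camera (lens distortion ignored)."""
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point (x)
    cy: float  # principal point (y)
    R: tuple[Vec3, Vec3, Vec3]  # world-to-camera rotation, row-major
    center: Vec3  # camera center in world coordinates

    def ray(self, u: float, v: float) -> Vec3:
        """Back-project pixel (u, v) to a viewing-ray direction in world coordinates."""
        d_cam = ((u - self.cx) / self.fx, (v - self.cy) / self.fy, 1.0)
        # R is a rotation, so its inverse is its transpose: d_world = R^T d_cam
        return tuple(sum(self.R[r][c] * d_cam[r] for r in range(3)) for c in range(3))


def triangulate_midpoint(cam1: Camera, uv1, cam2: Camera, uv2) -> Vec3:
    """Midpoint of the shortest segment joining the two viewing rays."""
    d1, d2 = cam1.ray(*uv1), cam2.ray(*uv2)
    w0 = sub(cam1.center, cam2.center)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("viewing rays are (nearly) parallel")
    s = (b * e - c * d) / denom  # distance parameter along ray 1
    t = (a * e - b * d) / denom  # distance parameter along ray 2
    p1 = add(cam1.center, scale(d1, s))
    p2 = add(cam2.center, scale(d2, t))
    return scale(add(p1, p2), 0.5)


# Usage: two parallel cameras 0.3 m apart, both looking down +z.
I3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
cam1 = Camera(1000.0, 1000.0, 500.0, 500.0, I3, (0.0, 0.0, 0.0))
cam2 = Camera(1000.0, 1000.0, 500.0, 500.0, I3, (0.3, 0.0, 0.0))
print(triangulate_midpoint(cam1, (700.0, 600.0), cam2, (600.0, 600.0)))
# ≈ (0.6, 0.3, 3.0), up to floating-point rounding
```

With noisy real-world keypoints the two rays rarely intersect exactly, which is why the midpoint of their closest approach is used as a compromise estimate.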

“OpenCap democratizes human movement analysis,” said Scott Delp, professor of bioengineering and of mechanical engineering and director of the Wu Tsai Human Performance Alliance, the study’s senior author. “We hope it can put these once-out-of-reach tools in more hands than ever before.”

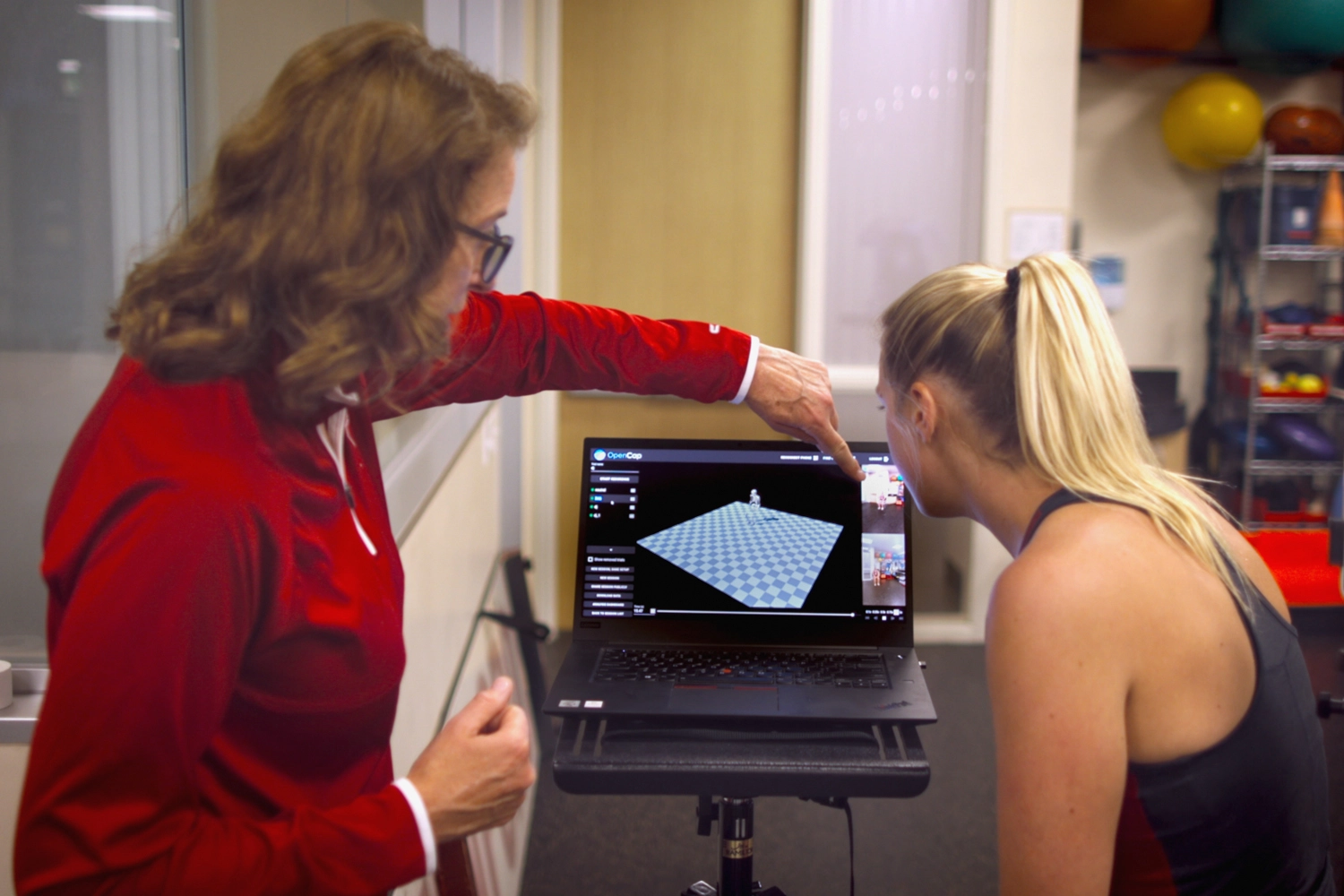

Occupational therapist Julie Muccini reviews an OpenCap capture with a study subject. Image credit: Kurt Hickman

| Movement at scale |

OpenCap combines the latest in computer vision, machine learning, and musculoskeletal simulation to make movement analysis widely available without specialized equipment or expertise.

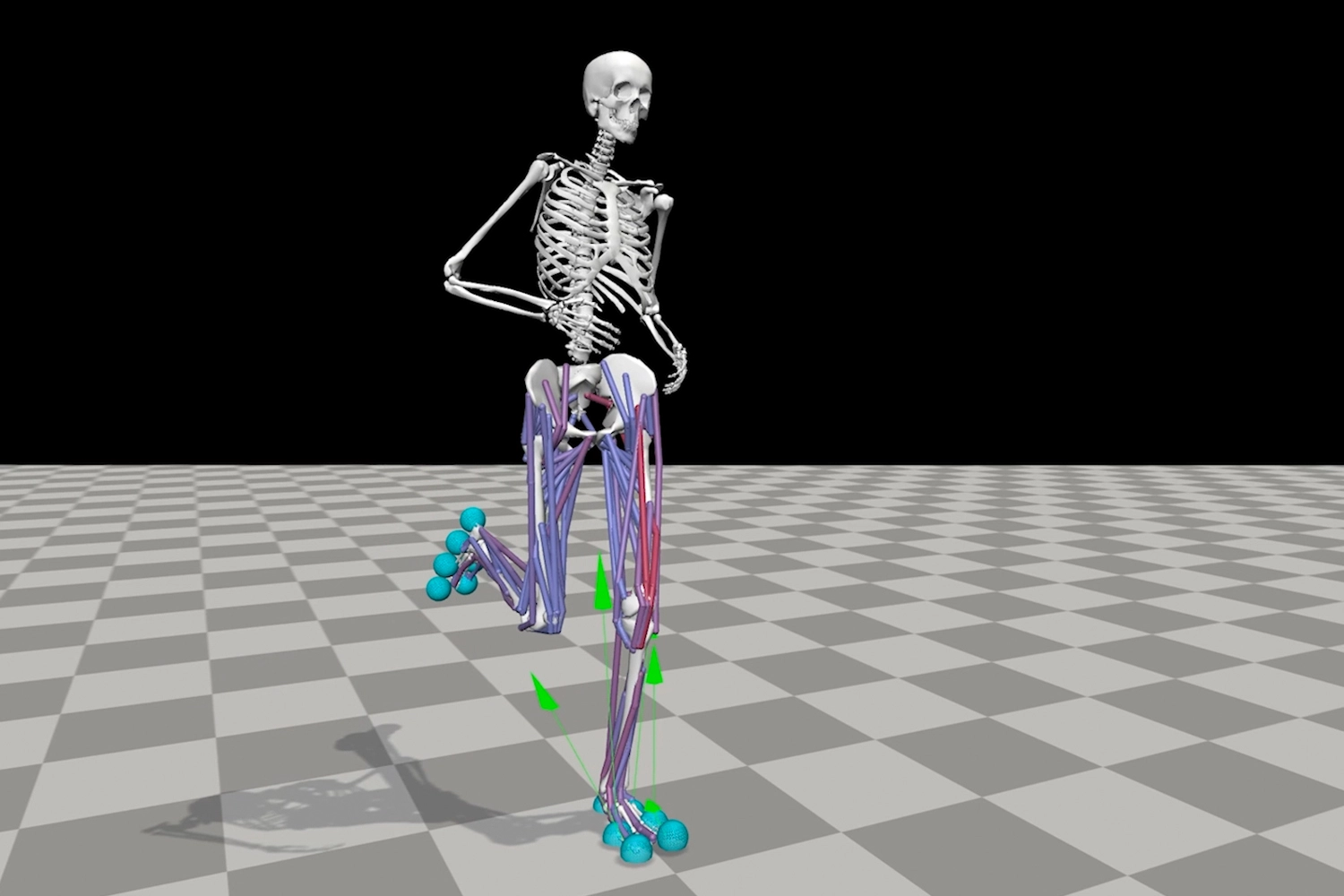

The tool computes how body landmarks – knees, hips, shoulders, and other joints – move through three-dimensional space. It then uses complex models of the physics and biology of the human musculoskeletal system to determine how the skeleton is moving and what forces are being applied during movement. From there, it can calculate important biomechanical data such as joint angles or joint loads.
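As a rough illustration of the last step, a joint angle can be read directly off three 3D landmarks with basic vector geometry. This is a simplified sketch, not OpenCap's method: the full tool derives joint angles from a musculoskeletal model fitted to the landmarks, as described above. The function names and landmark coordinates are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def angle_at_deg(a: Vec3, vertex: Vec3, c: Vec3) -> float:
    """Angle in degrees at `vertex` between the segments vertex->a and vertex->c."""
    u = [ai - vi for ai, vi in zip(a, vertex)]
    v = [ci - vi for ci, vi in zip(c, vertex)]
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0.0 or nv == 0.0:
        raise ValueError("landmarks must be distinct from the vertex")
    cos_theta = sum(x * y for x, y in zip(u, v)) / (nu * nv)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def knee_flexion_deg(hip: Vec3, knee: Vec3, ankle: Vec3) -> float:
    """Simplified knee flexion: 0 for a straight leg, increasing as the knee bends."""
    return 180.0 - angle_at_deg(hip, knee, ankle)


# Usage with made-up landmark positions in meters (y axis pointing up)
hip, knee = (0.0, 1.0, 0.0), (0.0, 0.5, 0.0)
print(knee_flexion_deg(hip, knee, (0.0, 0.0, 0.0)))  # straight leg, ≈ 0.0
print(knee_flexion_deg(hip, knee, (0.0, 0.5, 0.5)))  # shank horizontal, ≈ 90.0
```

A model-based approach goes further than this geometric shortcut: it respects joint constraints and segment lengths across frames, which raw landmark angles do not.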

“It will even tell you which specific muscles are being activated,” Delp noted.

In total, OpenCap provides a rich characterization of how humans move, information previously available only in specialized labs with proprietary equipment. While cost was a concern, the real bottleneck was the time and expertise required to perform these in-lab assessments.

“It would take an expert engineer days to collect and process the biomechanical data that OpenCap provides in minutes,” said Scott Uhlrich, director of research in Stanford’s Human Performance Lab and co-first author of the paper.

An OpenCap data collection session takes 10 minutes, and the processing is automated in the cloud. Stanford makes this cloud platform freely available to researchers, dramatically expanding who can study movement, where it can be studied, and how large these studies can be.

“The average number of patients in a biomechanics study today is just fourteen,” said Uhlrich. “In our study, we collected data from 100 individuals in less than 10 hours – this would have previously taken us a year.”

Delp's team has showcased OpenCap at several conferences for biomedical researchers, where it drew enthusiastic reviews. Already, thousands of researchers have begun using OpenCap. "It's very rewarding when people are adopting and using your work and answering research questions you never imagined," said Antoine Falisse, research engineer and co-first author with Uhlrich and former postdoc Łukasz Kidziński.

Example of what OpenCap reveals to users, including underlying forces in the musculoskeletal system that result from movement. Image credit: OpenCap

| Moving to the future |

Making it easy to create large movement datasets will usher in a new era of biomechanics research, powered by artificial intelligence. “There is the human genome,” said Delp, “but this is really going to be the motion-nome of the whole repertoire of human motion captured quantitatively.”

“With OpenCap, we can now shift our focus from collecting data to utilizing data for many applications like understanding peak performance, screening for disease risk, and assessing treatment efficacy,” said Falisse.

While the software is freely available for research, Uhlrich and Falisse have started a company to support coaches and clinicians who want to use the software commercially. "You can imagine athletes getting regular performance and injury risk assessments to guide their training," said Uhlrich, "or older adults having their walking regularly analyzed at the doctor's office."

“Our hope is that in democratizing access to human movement analysis, OpenCap will accelerate the incorporation of key biomechanical metrics into more and more studies, trials, and clinical practices to improve outcomes for patients across the world,” Delp concluded.

| Contributing researchers include occupational therapist Julie Muccini, biostatistician Michael Ko, radiology Assistant Professor (Research) Akshay Chaudhari, and Jennifer Hicks, executive director of the Wu Tsai Human Performance Alliance. The research was funded by the Wu Tsai Human Performance Alliance and the National Institutes of Health. |

| Media Contact: Jill Wu, Stanford School of Engineering: (386) 383-6061, jillwu@stanford.edu |

Source: Stanford News

| References |

Uhlrich SD, Falisse A, Kidziński Ł, Muccini J, Ko M, Chaudhari AS, Hicks JL, Delp SL (2023). OpenCap: Human movement dynamics from smartphone videos. PLoS Comput Biol 19(10): e1011462. https://doi.org/10.1371/journal.pcbi.1011462

| OpenCap is a software package developed at Stanford University to estimate the dynamics of human movement from smartphone video. To learn more, visit opencap.ai. OpenCap, YouTube, Jul 7, 2022 |